In the next 5 minutes, you'll learn how to use OpenAI's Batch API to get a 50% discount on any OpenAI API calls that don't need an immediate response. We're going to make a call to OpenAI's Batch API with no code.

OpenAI has recently announced their Batch API. See the API reference on the official OpenAI website. The Batch API allows you to upload prompts in a batch format, and asynchronously get responses to all of those prompts over the course of up to 24 hours.

But the best part of the Batch API is the discount: OpenAI's Batch API costs 50% less than their standard API.
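To make the saving concrete, here is a small sketch of the arithmetic. The per-token prices below are placeholders chosen for illustration, not OpenAI's actual rates; only the 50% factor comes from the text above.

```python
# Hypothetical prices in USD per 1M tokens. These are NOT OpenAI's real rates.
STANDARD_INPUT_PRICE = 5.00
STANDARD_OUTPUT_PRICE = 15.00
BATCH_DISCOUNT = 0.50  # the 50% Batch API discount described above


def cost_usd(input_tokens, output_tokens, batch=False):
    """Estimate the cost of a workload, optionally with the batch discount applied."""
    cost = (
        input_tokens * STANDARD_INPUT_PRICE
        + output_tokens * STANDARD_OUTPUT_PRICE
    ) / 1_000_000
    return cost * (1 - BATCH_DISCOUNT) if batch else cost


standard = cost_usd(2_000_000, 1_000_000)
batched = cost_usd(2_000_000, 1_000_000, batch=True)
print(f"standard: ${standard:.2f}, batch: ${batched:.2f}")
```

Swap in the current prices for your model to estimate your own savings.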

The batch format: JSONL

All of the inputs and outputs from OpenAI's Batch API use the JSONL format.

A JSONL file is a text file with one complete JSON object on each line.

You can read more about the JSONL format at jsonlines.org.
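If you ever do want to handle JSONL in code, it only takes the standard library. Here is a minimal Python sketch; the helper names `parse_jsonl` and `dump_jsonl` are made up for this example.

```python
import json

# Two JSONL lines: each line is a complete JSON object on its own.
jsonl_text = '{"custom_id": "task-0", "n": 1}\n{"custom_id": "task-1", "n": 2}\n'


def parse_jsonl(text):
    """Parse JSONL text into a list of Python objects, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def dump_jsonl(records):
    """Serialize records to JSONL: one compact JSON object per line."""
    return "".join(json.dumps(r, separators=(",", ":")) + "\n" for r in records)


records = parse_jsonl(jsonl_text)
print([r["custom_id"] for r in records])
```

Note that the file as a whole is not valid JSON; each line has to be parsed separately.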

But to keep things short, here's an example of what the input and output look like:

input-batch.jsonl

{"custom_id":"task-0","method":"POST","url":"/v1/chat/completions","body":{"model":"gpt-4o","temperature":0,"messages":[{"role":"user","content":"Tell me a random fact."}]}}

{"custom_id":"task-1","method":"POST","url":"/v1/chat/completions","body":{"model":"gpt-4o","temperature":0,"messages":[{"role":"user","content":"What is 2+2?"}]}}

{"custom_id":"task-2","method":"POST","url":"/v1/chat/completions","body":{"model":"gpt-4o","temperature":0,"messages":[{"role":"user","content":"List the first few prime numbers"}]}}

output-batch.jsonl

{"id": "batch_req_ve6HPNA8GOMvgFc8mjKSky0E", "custom_id": "task-0", "response": {"status_code": 200, "request_id": "e3ed878b03cead9df80a739807f00aed", "body": {"id": "chatcmpl-9exuPN7RRtCs7poS2zgusCYnbo1WH", "object": "chat.completion", "created": 1719550437, "model": "gpt-4o-2024-05-13", "choices": [{"index": 0, "message": {"role": "assistant", "content": "Sure! Did you know that honey never spoils? Archaeologists have found pots of honey in ancient Egyptian tombs that are over 3,000 years old and still perfectly edible. Honey's low moisture content and acidic pH create an environment that's inhospitable to bacteria and microorganisms, allowing it to last indefinitely."}, "logprobs": null, "finish_reason": "stop"}], "usage": {"prompt_tokens": 13, "completion_tokens": 64, "total_tokens": 77}, "system_fingerprint": "fp_ce0793330f"}}, "error": null}

{"id": "batch_req_wQBQIRY2g6Nw19zSiBB4Uvuj", "custom_id": "task-1", "response": {"status_code": 200, "request_id": "5b0176756e5c7eed324e4b075a888528", "body": {"id": "chatcmpl-9exuOO3ynkQ7J7DXHj4i2Ssr69cIV", "object": "chat.completion", "created": 1719550436, "model": "gpt-4o-2024-05-13", "choices": [{"index": 0, "message": {"role": "assistant", "content": "2+2 equals 4."}, "logprobs": null, "finish_reason": "stop"}], "usage": {"prompt_tokens": 14, "completion_tokens": 7, "total_tokens": 21}, "system_fingerprint": "fp_ce0793330f"}}, "error": null}

{"id": "batch_req_F8xQX1gaChQQ0AB2A0JSGMza", "custom_id": "task-2", "response": {"status_code": 200, "request_id": "dbfc70dcdce2b613a9f2ddfed46b08a7", "body": {"id": "chatcmpl-9exuPRWyMOwG4L70hHEnfNFqDydjM", "object": "chat.completion", "created": 1719550437, "model": "gpt-4o-2024-05-13", "choices": [{"index": 0, "message": {"role": "assistant", "content": "Sure! The first few prime numbers are:\n\n2, 3, 5, 7, 11, 13, 17, 19, 23, 29\n\nPrime numbers are natural numbers greater than 1 that have no positive divisors other than 1 and themselves."}, "logprobs": null, "finish_reason": "stop"}], "usage": {"prompt_tokens": 13, "completion_tokens": 60, "total_tokens": 73}, "system_fingerprint": "fp_d576307f90"}}, "error": null}

Notice the "message" object in each line, which holds the model's response.

... "message": {"role": "assistant", "content": "Sure! Did you know that honey never spoils? ...

Let's run inference.

We're going to try running inference for a batch ourselves. To save time, we'll use the premade input-batch.jsonl file. Click here to download it if you haven't done so already.

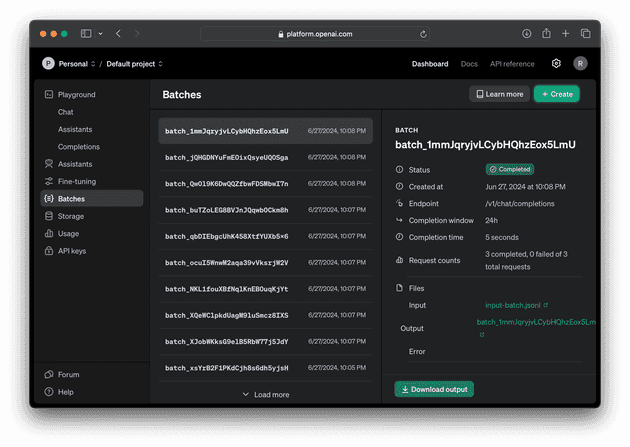

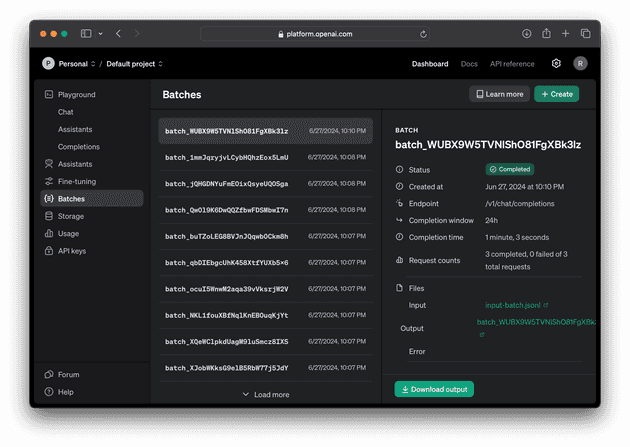

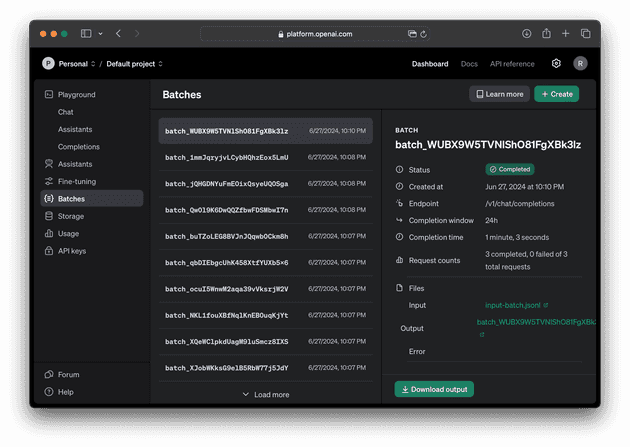

Let's go to OpenAI's Platform – Batches page and create a batch.

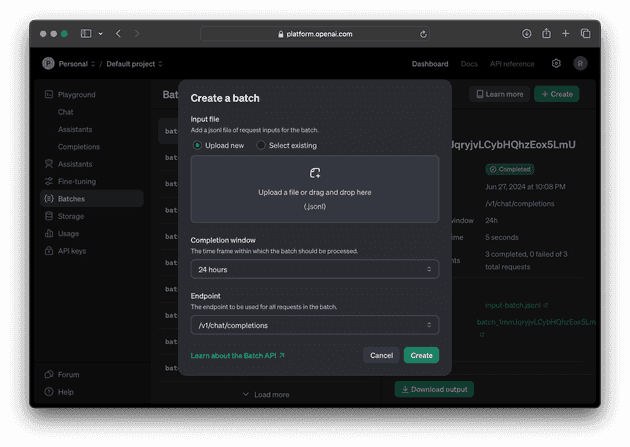

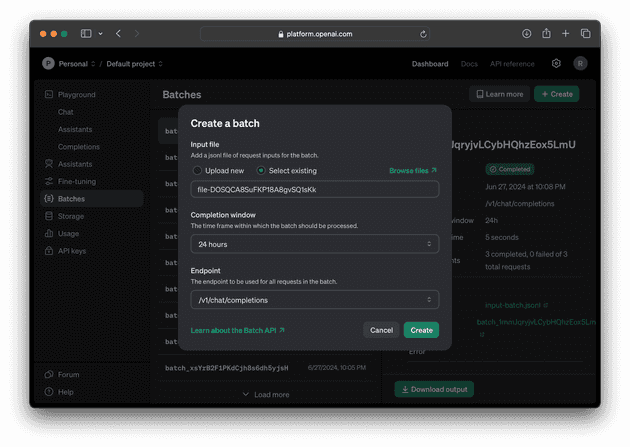

Tap on the green "Create" button in the top right corner. You will see the "Create a batch" window appear.

Drag the input-batch.jsonl file you downloaded earlier onto the file picker.

Tap the "Create" button.

Now you should see your batch processing. Give it a few moments. OpenAI says a batch can take up to 24 hours, but we've seen small batches of this size typically complete within 1-2 minutes.

Once your batch is complete, you'll see a big green Download output button. Tap it to download the output of your batch.

Watch out for your browser blocking the download as a pop-up. In my case, Safari does; you can click in the URL bar to allow the download.

And that's it! Let's look at the output of our batch.

The output of a batch

Use a text editor like VS Code to open the .jsonl file that was downloaded. You'll see one JSON object per line.

batch_WUBX9W5TVNlShO81FgXBk3lz_output.jsonl

{"id": "batch_req_ve6HPNA8GOMvgFc8mjKSky0E", "custom_id": "task-0", "response": {"status_code": 200, "request_id": "e3ed878b03cead9df80a739807f00aed", "body": {"id": "chatcmpl-9exuPN7RRtCs7poS2zgusCYnbo1WH", "object": "chat.completion", "created": 1719550437, "model": "gpt-4o-2024-05-13", "choices": [{"index": 0, "message": {"role": "assistant", "content": "Sure! Did you know that honey never spoils? Archaeologists have found pots of honey in ancient Egyptian tombs that are over 3,000 years old and still perfectly edible. Honey's low moisture content and acidic pH create an environment that's inhospitable to bacteria and microorganisms, allowing it to last indefinitely."}, "logprobs": null, "finish_reason": "stop"}], "usage": {"prompt_tokens": 13, "completion_tokens": 64, "total_tokens": 77}, "system_fingerprint": "fp_ce0793330f"}}, "error": null}

{"id": "batch_req_wQBQIRY2g6Nw19zSiBB4Uvuj", "custom_id": "task-1", "response": {"status_code": 200, "request_id": "5b0176756e5c7eed324e4b075a888528", "body": {"id": "chatcmpl-9exuOO3ynkQ7J7DXHj4i2Ssr69cIV", "object": "chat.completion", "created": 1719550436, "model": "gpt-4o-2024-05-13", "choices": [{"index": 0, "message": {"role": "assistant", "content": "2+2 equals 4."}, "logprobs": null, "finish_reason": "stop"}], "usage": {"prompt_tokens": 14, "completion_tokens": 7, "total_tokens": 21}, "system_fingerprint": "fp_ce0793330f"}}, "error": null}

{"id": "batch_req_F8xQX1gaChQQ0AB2A0JSGMza", "custom_id": "task-2", "response": {"status_code": 200, "request_id": "dbfc70dcdce2b613a9f2ddfed46b08a7", "body": {"id": "chatcmpl-9exuPRWyMOwG4L70hHEnfNFqDydjM", "object": "chat.completion", "created": 1719550437, "model": "gpt-4o-2024-05-13", "choices": [{"index": 0, "message": {"role": "assistant", "content": "Sure! The first few prime numbers are:\n\n2, 3, 5, 7, 11, 13, 17, 19, 23, 29\n\nPrime numbers are natural numbers greater than 1 that have no positive divisors other than 1 and themselves."}, "logprobs": null, "finish_reason": "stop"}], "usage": {"prompt_tokens": 13, "completion_tokens": 60, "total_tokens": 73}, "system_fingerprint": "fp_d576307f90"}}, "error": null}
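For larger batches, you probably won't want to read the answers by eye. The sketch below pulls the reply text out of each output line and keys it by `custom_id`. It assumes the record shape shown above; the function name `extract_answers` and the shortened `sample` record are made up for this example.

```python
import json


def extract_answers(jsonl_text):
    """Map each custom_id to the assistant's reply, or None if that request failed."""
    answers = {}
    for line in jsonl_text.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        response = record.get("response")
        # Treat a per-request error or a non-200 status as a failed request.
        if record.get("error") or not response or response.get("status_code") != 200:
            answers[record["custom_id"]] = None
            continue
        answers[record["custom_id"]] = response["body"]["choices"][0]["message"]["content"]
    return answers


# A shortened record in the same shape as the output lines above.
sample = json.dumps({
    "id": "batch_req_example",
    "custom_id": "task-1",
    "response": {
        "status_code": 200,
        "body": {"choices": [{"index": 0, "message": {"role": "assistant", "content": "2+2 equals 4."}}]},
    },
    "error": None,
})

answers = extract_answers(sample)
print(answers)
```

Keying on `custom_id` rather than on line position is the safer choice, since it doesn't rely on the output lines arriving in the same order as the input.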

And it's that easy. Let's sum up the process in 5 easy steps.

- Create an input .jsonl file containing each prompt.

- Upload your .jsonl file to OpenAI.

- Create a batch using the .jsonl file.

- Wait for the batch to process (up to 24 hours, but sometimes minutes).

- Download the output .jsonl file.
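We did all of this in the browser, but steps 2 to 5 can also be scripted. Here is a rough sketch, assuming `client` is an `openai.OpenAI()` instance from the official Python library (v1 or later). The function name `run_batch` and the 30-second polling interval are our own choices, not anything OpenAI prescribes.

```python
import time


def run_batch(client, input_path, poll_seconds=30):
    """Upload a JSONL file, create a batch, wait for it, and return the output text.

    `client` is assumed to be an `openai.OpenAI()` instance (openai-python v1+).
    """
    # Step 2: upload the input file with purpose "batch".
    with open(input_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="batch")

    # Step 3: create the batch against the chat completions endpoint.
    batch = client.batches.create(
        input_file_id=uploaded.id,
        endpoint="/v1/chat/completions",
        completion_window="24h",
    )

    # Step 4: poll until the batch reaches a terminal status.
    terminal = {"completed", "failed", "expired", "cancelled"}
    while batch.status not in terminal:
        time.sleep(poll_seconds)
        batch = client.batches.retrieve(batch.id)

    if batch.status != "completed":
        raise RuntimeError(f"batch ended with status {batch.status!r}")

    # Step 5: download the output file contents as text.
    return client.files.content(batch.output_file_id).text
```

To try it, you would create the client with `from openai import OpenAI; client = OpenAI()` (which reads your API key from the `OPENAI_API_KEY` environment variable) and call `run_batch(client, "input-batch.jsonl")`.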

Easily make input .jsonl files.

Do you want to use the Batch API for yourself without having to write any code?

You can use Batchmon's prompt generator tool to easily create batch prompts from a CSV file.

The tool is completely free and outputs the exact .jsonl format you need for OpenAI's Batch API. Then all you have to do is repeat the steps we did on this page to get your output.
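If you're curious what such a conversion involves, here is a minimal Python sketch of the idea. It is not Batchmon's implementation; the function name `csv_to_batch_jsonl` and the `prompt` column name are assumptions made for this example. Each request line follows the same shape as input-batch.jsonl above.

```python
import csv
import io
import json


def csv_to_batch_jsonl(csv_text, model="gpt-4o", prompt_column="prompt"):
    """Turn each CSV row into one Batch API request line."""
    lines = []
    for i, row in enumerate(csv.DictReader(io.StringIO(csv_text))):
        request = {
            "custom_id": f"task-{i}",  # unique per request, used to match outputs
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,
                "temperature": 0,
                "messages": [{"role": "user", "content": row[prompt_column]}],
            },
        }
        lines.append(json.dumps(request, separators=(",", ":")))
    return "\n".join(lines) + "\n"


# A tiny CSV with a header row and one prompt per line.
csv_text = "prompt\nTell me a random fact.\nWhat is 2+2?\n"
print(csv_to_batch_jsonl(csv_text), end="")
```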